I totally promised there would be video. Embedded, it’s too small to look at, or too big to fit nicely in the page. So you’re jus’ gon’ have to go to Vimeo and watch it there. Right now. Or later.

HackWaterloo! Meet Little Turk!

Five hours of train to Toronto, a 6:00am alarm in Toronto, an hour’s drive to Waterloo, six furious hours of coding, twelve presentations, impatient waiting through deliberations, and, at last, judges’ decisions. Then, then.. then! I won Hack Waterloo (!!!), with my project Little Turk.

I wasn’t going to go to Hack Waterloo.

It’s Very Far from Montréal. Particularly for a non-driver (True story, I don’t know how to drive). But I ended up going anyway, thanks to a nudge from Leila (:

As for my project: ideas sometimes come from unexpected places. I said this in the developer chat-room at work, last week on Wednesday:

“Maybe I should wear my tutu and mask at the hack thing this weekend, instead of on Dress Like a Grownup day <:”

Instead of wearing a tutu and mask to Hack Waterloo, though, it occurred to me that maybe I could do another video-centered hack using clips of myself in a tutu and mask (much more comfortable!). Something else I wanted to do, after using the Idée APIs pretty heavily in previous hack events, was to use a broader collection of APIs. Creating a query-interface seemed like a pretty good way to combine the two. So I did!

It presented a video window, with a very small version of myself sitting in a tutu and Venetian mask, with a laptop, hula hoop, map, and a ukulele. There might be other things if you ask the right thing. Okay, there are. Next to the video was a field for entering commands, and a box for their output.

When you typed a query, some very simple language processing took place, and the video of the Little Turk would react to the query in some fashion, and provide a response. Mostly, she ended up typing (: But if you asked her to look for restaurants (using the Yellow Pages API), she would point at her map until the results had been retrieved, and would then start typing. You could also tell her a colour that you like, and she would find pictures for that colour from Piximilar (alas, video exists for Dark Turk, who knows about drawing pictures; but the three minute demo time was going to be too short for a narrative with two turks). If you asked about a domain, she would ask PostRank about that domain.

Asking about invoices would get you a smartass remark (:

The video component, I put together using Quartz Composer. If you have the OS X development tools installed, Quartz Composer is lurking in there, somewhere. Roughly, it would look at an XML file to see which clip it should be playing, and play that clip. It also maintained a queue of the most recent 25 frames of video, which it combined with the current clip (mostly to smooth out transitions between the different clips with visual noise. But it does also make the video look delightfully creepy).
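Quartz Composer is a visual tool, so there was no code like this in the hack itself. Still, the frame-queue idea can be sketched in a few lines of Python. Everything here is an assumption made for illustration: frames are flat lists of pixel values, the blend is a plain average, and `history_weight` is a made-up knob.

```python
from collections import deque

HISTORY_LENGTH = 25  # the post mentions a queue of the 25 most recent frames


class FrameBlender:
    """Blend each new frame with the average of recently seen frames."""

    def __init__(self, history_length=HISTORY_LENGTH, history_weight=0.5):
        # deque(maxlen=...) silently drops the oldest frame once it is full.
        self.history = deque(maxlen=history_length)
        # Hypothetical knob: 0 shows only the new frame, 1 only the average.
        self.history_weight = history_weight

    def push(self, frame):
        """Add a frame (a flat list of pixel values) and return the blend."""
        self.history.append(list(frame))
        count = len(self.history)
        # Per-pixel average over the queue, which includes the new frame.
        average = [sum(values) / count for values in zip(*self.history)]
        w = self.history_weight
        return [(1 - w) * new + w * old for new, old in zip(frame, average)]
```

When the clip changes, the average still carries the previous clip for a while, which is roughly where the smeared, slightly creepy transition would come from.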

The query interface was HTML/JavaScript/jQuery, which isn’t exotic at all. The language parsing was more-or-less a tangle of if-statements and imagination. There was also a tiny server-side component that was responsible for updating the XML file when Little Turk needed to start doing something else.
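For a flavour of what a tangle of if-statements can look like, here is a small Python sketch of keyword routing. It is not the original JavaScript: the service labels, clip names, colour list and domain pattern are all hypothetical, chosen only to mirror the behaviours described above (restaurants, colours, domains, invoices).

```python
import re

# Hypothetical colour vocabulary; the real hack's word list is not known.
KNOWN_COLOURS = {"red", "orange", "yellow", "green", "blue", "purple", "pink"}

# Loose pattern for something that looks like a domain name.
DOMAIN_PATTERN = re.compile(r"\b[a-z0-9-]+(?:\.[a-z0-9-]+)+\b")


def route_query(query):
    """Return (service, clip, argument) for a typed query."""
    text = query.lower()
    words = set(re.findall(r"[a-z]+", text))

    if "invoice" in text:
        # Invoices only ever earn a remark.
        return ("remark", "typing", None)
    if "restaurant" in text:
        # Point at the map while the search runs, as the post describes.
        return ("yellow_pages", "point_at_map", None)

    domain = DOMAIN_PATTERN.search(text)
    if domain:
        return ("postrank", "typing", domain.group(0))

    colours = sorted(words & KNOWN_COLOURS)
    if colours:
        return ("piximilar", "typing", colours[0])

    # Nothing matched: she just types.
    return ("none", "typing", None)
```

The order of the checks is the whole design: the first keyword that matches wins, and anything unrecognised falls through to the default clip.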

Pictures and video, later (:

HackOTT! 4th Place! My Project! Icarus! It’s Magic!

At HackMTL, I learnt an important lesson: Make something which is very obvious and easy to explain. Coalesce was interesting but so esoteric that I don’t think anyone really followed what it was actually doing. So a photo mosaic seemed like a good choice. Except a photo mosaic isn’t the most compelling thing ever, and so I made a video photo mosaic instead (:

And while I did come in 4th, again (: Leila decided to award me a prize for best use of the Piximilar API. Aww.

I used the iSight camera in my MacBook as a video source for both kinds of mosaic. For the first, simpler kind, you could click anywhere on the video, and the app would show a collection of images that matched that colour. If you clicked a second time, it would show you a collection of images that were half of each colour, and so on (up to 5 colours – at which point the oldest colour is dropped).
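The click history behaves like a fixed-length queue. A minimal Python sketch, assuming each selected colour gets an equal share of the search (the post only says "half of each" for two colours, so the equal split beyond two is a guess, and `ClickPalette` is a made-up name):

```python
from collections import deque

MAX_COLOURS = 5  # from the post: a sixth click pushes out the oldest colour


class ClickPalette:
    """Remember the most recently clicked colours, oldest first."""

    def __init__(self, max_colours=MAX_COLOURS):
        self.colours = deque(maxlen=max_colours)

    def click(self, colour):
        """Record a clicked colour and return (colour, share) pairs."""
        self.colours.append(colour)
        share = 1 / len(self.colours)  # assumed: every colour weighs the same
        return [(c, share) for c in self.colours]
```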

The second kind showed the video as a constantly updating photo mosaic. Having it show a tile for each individual colour that was present would have involved making an enormous number of requests to the colour search API; so instead, I made it round colours. At the default rendering level, it would round each of the RGB channel values to the nearest multiple of 64 – enough resolution to sort of see what’s going on, but a small enough colour set that precaching it isn’t tooo onerous.
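The rounding itself is tiny. A sketch in Python, with one assumption flagged: rounding 255 to the nearest 64 gives 256, which is not a valid channel value, so this version clamps it back to 255. How the original handled that edge is not stated.

```python
def round_channel(value, step=64):
    """Round one 0-255 channel value to the nearest multiple of `step`."""
    rounded = (value + step // 2) // step * step
    return min(rounded, 255)  # assumed clamp: 256 is out of range


def round_colour(rgb, step=64):
    """Round an (r, g, b) tuple channel by channel."""
    return tuple(round_channel(channel, step) for channel in rgb)


def to_hex(rgb):
    """Format an (r, g, b) tuple as a #rrggbb string."""
    return "#" + "".join(f"{channel:02x}" for channel in rgb)
```

For example, `to_hex(round_colour((200, 100, 30)))` gives `"#c08000"`. Under the clamping assumption each channel can only land on 0, 64, 128, 192 or 255, so there are at most 5 × 5 × 5 = 125 colours to precache at the default level.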

But I also made it so you could adjust the colour accuracy! With more accurate colours, the photo mosaic is less complete; but as time goes by, its cache of images can expand to include all of the colours which are shown. I didn’t quite get to making an asynchronous image loader; so for each frame of video-mosaic rendered, it would load one more colour so that it wouldn’t stall too long on a single frame.
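The one-colour-per-frame trick can be sketched like this in Python. `TileCache` and `fetch_tile` are hypothetical names; `fetch_tile` stands in for whatever slow call turns a colour into an image.

```python
class TileCache:
    """Fill a colour-to-tile cache lazily, one new colour per frame."""

    def __init__(self, fetch_tile):
        self.fetch_tile = fetch_tile  # hypothetical callable: colour -> tile
        self.tiles = {}

    def render_frame(self, frame_colours):
        """Return a tile (or None) for every colour in the frame."""
        # Fetch at most one missing colour, so no frame waits on more
        # than a single slow request.
        for colour in frame_colours:
            if colour not in self.tiles:
                self.tiles[colour] = self.fetch_tile(colour)
                break
        # Colours that have not been fetched yet show up as None (a gap).
        return [self.tiles.get(colour) for colour in frame_colours]
```

Early frames have holes in them, and the mosaic fills in as the cache grows, which matches the less-complete-at-first behaviour described above.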

A demo, let me show you one:

HackOTT – Icarus from _hristine on Vimeo.

(Uhm. It looks like there’s something weird with Vimeo embedding which is making it show the video for Coalesce twice. If you’re looking at this on my homepage, and this seems confusing, click the link under the video..)

HackMTL! 4th Place! My Project! Coalesce! It’s Magic!

I was fortunate enough to be among the participants at HackMTL on Saturday; an event where a large group of people came together (alone, small teams, and an ensemble cast) to build things using a collection of APIs.

I worked alone d: But I did work on a somewhat unique project!

When I read about the APIs that were going to be available during the event, the Piximilar colour search was the API that looked most compelling to me. Rather, having the kind of data that the colour API provides was much more compelling than the other APIs.

The general shape of the thing I wanted to build was something where a collection of computers would talk to each other in such a way that they could collectively show a colour gradient. My initial intention was for a neighbouring computer to modify your own colour to be more like itself; however, I had an argument with colour theory and time. So, instead, when a neighbouring computer gives you a colour, that colour becomes the edge of the gradient on that side of your screen (:

Essentially, each node in the network has its own colour. When you do something, like change your name or your colour, everyone in the network is notified, and has the opportunity to add you to their left, or to their right. If you have added someone to your left and/or right, then when you choose a new colour, the people on your left and right will have their mosaics updated, with your new colour becoming their new colour on the left or right.

When you’ve got one person pushing colours to you, you’ll see a colour gradient from your colour to their colour. But if you’ve got people pushing colours on both sides, you’ll see a gradient from the left colour to your colour to the right colour. If you’ve got multiple people pushing colours on one side, the most recent one wins (:
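Those gradient rules can be sketched as interpolation between two or three colour stops. This Python version blends in RGB for simplicity (the client used a jQuery plugin for HSV colours, so the real blending likely differed), and the function names are made up.

```python
def lerp_colour(start, end, t):
    """Linearly interpolate between two (r, g, b) tuples, t in [0, 1]."""
    return tuple(round(a + (b - a) * t) for a, b in zip(start, end))


def gradient_row(own, columns, left=None, right=None):
    """Colours for one row of cells, running left to right."""
    # Stops run left -> own -> right; a side with no neighbour is skipped.
    stops = [colour for colour in (left, own, right) if colour is not None]
    if len(stops) == 1 or columns <= 1:
        return [own] * columns

    segments = len(stops) - 1
    row = []
    for i in range(columns):
        position = i / (columns - 1) * segments  # 0 .. segments
        index = min(int(position), segments - 1)
        row.append(lerp_colour(stops[index], stops[index + 1], position - index))
    return row
```

With one neighbour the row fades from your colour to theirs across the whole width; with two, your colour sits in the middle column.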

Technically, the client is HTML/JavaScript/jQuery + jQuery plugins for manipulating HSV colours and growl notifications. The client polls the server each second to see if there are any messages for the current user. Some messages (“Someone is here!”, “Someone is now called Something Else!”) are straight plain text; with remaining messages being delimited_strings_with_useful_information_for_the_client (Telling a client to use a new colour on the right or left; or telling them about a possible new neighbour).
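The actual wire format isn't spelled out here, so the following Python sketch invents one purely for illustration: plain-text notices carry no delimiter, and a colour push looks like `colour|left|#ff0000`. The `|` delimiter, the `colour` keyword and the `state` dictionary are all hypothetical.

```python
def parse_message(raw, delimiter="|"):
    """Split one server message into (kind, fields)."""
    if delimiter not in raw:
        return ("text", [raw])  # plain-text notice, shown as-is
    kind, *fields = raw.split(delimiter)
    return (kind, fields)


def apply_messages(state, messages):
    """Apply one poll's worth of messages to the client state, in order."""
    for raw in messages:
        kind, fields = parse_message(raw)
        if kind == "text":
            state["notices"].append(fields[0])
        elif kind == "colour" and len(fields) == 2 and fields[0] in ("left", "right"):
            side, colour = fields
            state[side] = colour  # a later push on the same side overwrites
        # Any other kind is ignored in this sketch.
    return state
```

Because messages are applied in arrival order, the most recent colour pushed to a side is the one that sticks.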

The main image-display area is just a collection of div tags, with appropriate classes for accessing any given cell based on its row and column. Changing an image involved changing the background of one of these div elements.

The images came from Piximilar, which is an image/colour based search service. I pulled together a simple proxy to route same-domain queries from AJAX requests in the client to the appropriate API calls.

The server is a sketchy-as-hell, simplest-thing-that-could-possibly-work PHP/MySQL thing. I expect that it will stop working in a useful fashion soon, since it’s currently sending around far more data than it needs to, or should (:

The code lives on at https://github.com/hristine/coalesce if you want to peer at how I spent my time, and how it looks underneath (: The client can be accessed at http://www.neato.co.nz/Coalesce/Client; with the caveat that I’m not sure how long the images will be available (and that I’m not sure if an excess of people will cause it to have a bit of a sad <: )

So that's what I spent five hours doing on Saturday. Do you want to see a movie? Of course you want to see a movie!

Over There

On Stories.

– Muriel Rukeyser

– Barbara Kingsolver to Kurt Andersen

YUL-YVR-AKL-WLG-AKL-YVR-YUL

Canada has terrible airport codes that, for the most part, bear no resemblance to the name of the city. YUL? Really?

In any case, I am embarking on an epic voyage in February.

I leave Montréal February 14th, get to Wellington on the 16th (thanks international date line!), spend the 18th and 19th at Webstock, then leave Wellington on the 24th, arriving back in Montréal on.. the 24th. Travel gets weird when you go far.

I imagine there will be some kind of eventfulness and insobriety on the 20th, being that I am not often in New Zealand, and exactly no other reason.

Discoveries in the Field of Macaroni and Cheese

My first discovery, in the field of Macaroni and Cheese, was that my pantry has a distinct lack of macaroni. Or, indeed, any of the short-tube pasta family. This leads to my second discovery: Orecchiette makes perfectly serviceable macaroni and cheese, although the name seems a little misleading. Alas, Orecchiette and Cheese just doesn’t have the same ring to it.

My third Macaroni and Cheese discovery is an important one: when faced with a pile of richness, such as comes with Macaroni and Cheese, possibly the very finest accompaniment is pickled red onions. I imagine there are many other perfectly wonderful pickles that would also offer the tangy flavour contrast that pickled red onions offer, although it is important to bear in mind just how beautiful and bright they are in contrast to the shades of white in Macaroni and Cheese.

The fourth is another insight into the making of Macaroni and Cheese: making a roux from duck fat instead of butter is a splendid idea, and works really well. The fifth – if you’re going to add something delicious and porky to it, then it’s going to be even more delicious if you brown it at the start, and allow it to cook in the white sauce.

The sixth discovery I made is that I really should keep better track of the quantities of ingredients as I cook. The trouble with making this kind of thing by eye is that next time your eye might lie. So here is a vague sort of a guide.

For making enough for one hungry person, or two people who don’t eat enormously…

- In a large saucepan, start boiling water for the pasta.

- Meanwhile, finely dice one shallot, and roughly chop anything delicious and porky you were thinking about adding (You do not, of course, have to add anything porky, and you could, indeed, add anything your heart desired. Mushroomy things seem like they would be delicious.)

- In a smaller pot, on medium heat, combine the shallots and porky-things with about a tablespoon of duck fat (or butter), and gently cook until it all smells delicious. Around about now, the water for the pasta is probably boiling, so go right ahead and add three’ish handfuls of pasta. You’ll get about the right amount. I trust you. It’ll get a little larger when you cook it, so imagine what your casserole dish would look like mostly filled with cooked pasta, and add about the right amount for that to happen (:

- Add some flour to the smaller pot. Within my recollection, I think I added about a teaspoon and a half; but really I shook the bag at it (carefully) until it looked right (which is not useful at all to anyone who doesn’t know what I mean. And if you already knew, you’d be making up your own Macaroni and Cheese as you went along). If you don’t add enough, you’ll end up with a sauce which is a little too liquidy. If you add too much, however, you’ll end up with something gluey. Be sure to whisk the flour in until there are no lumps. Otherwise doom..

- Once the flour has had a chance to cook, add a cup or so of milk to the pot, and embark on another round of whisking to ensure there are no lumps. Because lumps are not delicious.

- Keep on whisking while you wait for the pasta to finish cooking. It’ll thicken up after a while.

- Then, realise you haven’t done anything about cheese. Grab a wedge of blue cheese from yonder fridge. Chop it up roughly into chunks (about 1cm/half inch bits), and stir into the sauce. At this point, if the pasta hasn’t quite finished, you can turn the element off and allow the sauce to continue to be warmed by residual heat.

- Once the pasta is done, drain, then combine with the sauce. The sides of my saucepan are higher than my casserole dish, so I dump pasta on the sauce, stir to coat, and then transfer to a casserole dish. On top, I added finely grated pecorino, since that’s what lives in my fridge. I hear breadcrumbs are popular (panko, in particular), but breadcrumbs are not something that lives in my pantry, so no breadcrumbs for me.

- Casserole goes into the oven (About 180°C) until it smells delicious and has browned on top. I’m not sure how long it was, I was hungry and in a trance, waiting for my delicious food. It was probably about 15 minutes. However, the kind of time dilation that takes place while you are waiting for pizza to be delivered was taking place, so there’s no way to be sure.

- Once done, let it sit for five minutes before attempting to eat. That gives a chance for the sauce and such to make friends and be more delicious. And if you did try and eat it, you’d go “Ow, my mouth. My burning mouth”. So it’s for the best, really. You’ll thank me.

Then the eating! Don’t forget to add pickles (: They really are a very nice flavour and texture contrast to the carby-cheesy delight that is… Macaroni and Cheese.

(Here’s a funny thing about me and Macaroni and Cheese: For a ridiculously long time I’ve been terrified of making bechamel sauces. To say that I am terrified is to overstate massively, I assure you. It’s less terror and more awareness of my incompetence – the times I tried, they turned out horribly. Turns out I was probably adding too much flour all along. Oh well, now I know. For Christmas dinner, I was recruited as chief sous chef. Job number one, the most important job among all jobs I had to do: make four cups of mornay sauce. Oh the horror. As it turns out, it worked out amazingly, and thus, I appear to have inadvertently gained the super power of making bechamel-based sauces. Victory for me! I rather suspect that I should make efforts to conquer my other sauce nemeses: mayonnaise and hollandaise.)

♬

Music is the universal language of mankind

– Henry Wadsworth Longfellow

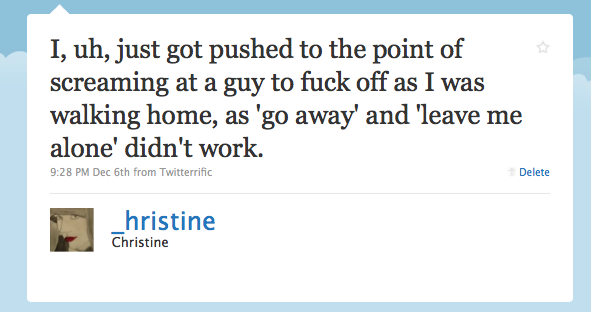

On Being a Woman in the World

This, changes things.

I was walking home from a gig by myself, not particularly late on a Sunday night. Walking up St-Laurent, someone walking the other way stopped to talk at me. Someone asking for change is more passive. I stopped to listen. They wanted to go get a drink somewhere. I declined, and continued to walk, they continued to talk. Decline, decline, decline, leave me alone.

Still following me, a couple blocks from where I live, people walking on the sidewalk towards us, I said, “Do I need to make a fuss in front of these people for you to leave me alone?”.

Several steps further along, with a group of people milling across the street, I yelled at him to fuck off. Apparently that makes me a bitch. But, really, I feel extremely lucky that name-calling is all he did, and that he walked back away in the opposite direction – because what if he hit me for having the audacity to yell, and to not do what he wanted. You know?

I did get the rest of the way home, uneventfully. Although the saga continues.

Skip forward a couple of days, and I was at a dork-Xmas party at a bar. There are many dorks. Who are generally polite. There was also a bouncer who was less polite. A half dozen sentences of small talk, and they had the audacity to squeeze at my belly/waist in a how-ripe-is-this-fruit kind of manner. Bad Touch. That was a little easier to deal with – filthy look with an “I’m going over there, now”, because, y’know, what could he do about it?

Although when I left the bar, he was standing outside at the front door; and I had a what-if-he-follows-me-home freak out, which doesn’t seem rational.

Skip forward a couple of days, and I was going to be meeting someone at a metro station. I got to the foyer at street level, and there were a bunch of guys milling around. Rather than standing around inside, in case one of them started to bother me, I waited outside, which doesn’t seem rational.

Skip forward a couple of days, and you get to today. I have a ticket to go to a concert tonight. And I now have an expectation that, by being a woman who isn’t walking around with a guy, I will be considered fair game, and that unless I am defensive, another man will feel that it’s appropriate to touch or squeeze at me.

I’m not sure what I can do to change that. I am, at a fundamental level, someone who is polite, and nice, and pleasant, and open; and I don’t know how I can become someone who is closed. I do not like being a person who is overcautious to irrationality.

I really do not like being a person who is being evaluated almost entirely on how I look – neither man who touched me uninvited had any information to suggest that I am the crazy-freaky-interesting person that I am.